What is Volumetric Video? A Beginner’s Guide to the Next Stage of Video

It’s One of the Fastest Growing New Video Technologies Out There, but What is Volumetric Video and How is it Changing Things? This Beginner’s Guide Will Help Make Sense of it All.

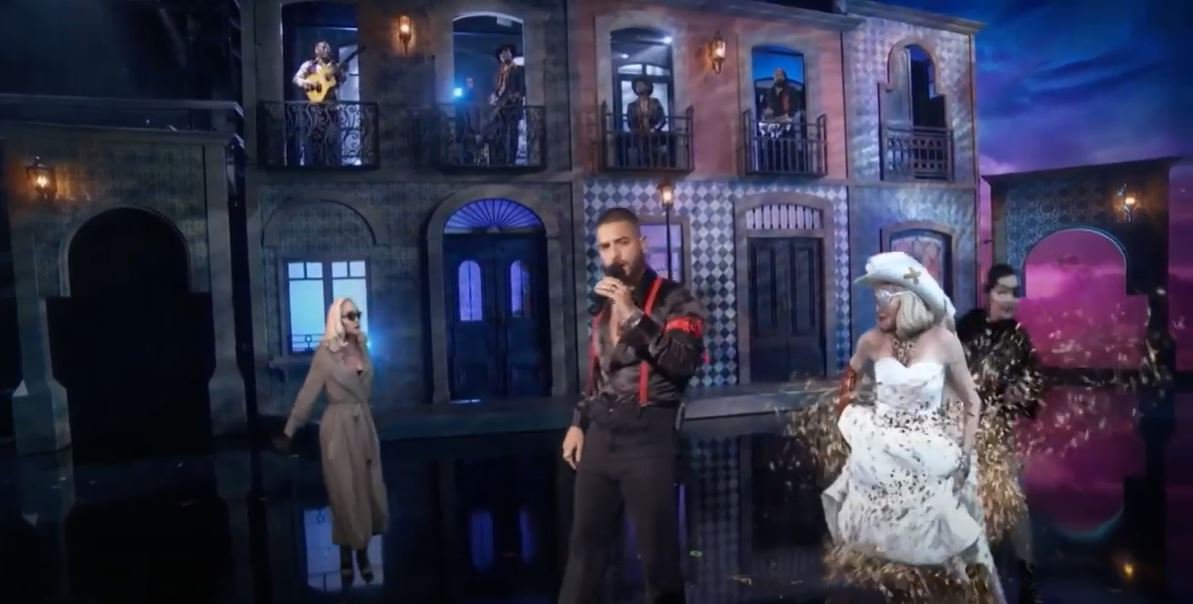

In 2019 at the Billboard Music Awards, attendees and fans watching at home were treated to a live appearance from Madonna, as she performed her latest single, “Medellín.” But after she had spent decades pushing the boundaries of what a performance can be, the bar for a Madonna stage show was incredibly high. So the star turned to someone she knew without a doubt could keep up: herself. To the surprise and shock of fans around the world, Madonna appeared on stage beside herself. As she sang her latest hit, other Madonnas appeared and danced alongside the original, creating a show unlike any other, and leaving fans to wonder how she pulled it off.

To create a convergence of Madonnas, the performer and her team created photoreal holograms of the singer, made possible by volumetric capture, highlighting the potential for volumetric video across multiple industries, from music to VFX to e-commerce and more.

So, What is Volumetric Capture?

Volumetric capture involves using multiple cameras and sensors to film a subject, creating a full volume recording of the subject, rather than a flat image. Through post-production, this captured volume data becomes a volumetric video, which is then viewable from any angle, with realistic depth, color and lighting, on any compatible platform, including mobile devices.

The uses for volumetric video range from creating realistic holograms to highlighting 3D environments to putting together an augmented reality (AR) project and more. It can be used for character creation in VFX and games, or giving potential shoppers a look at what clothes may look like in motion on a real person, from any angle. It can put you directly on the stage with your favorite band, and allow you to control the view during the biggest sporting events in the world.

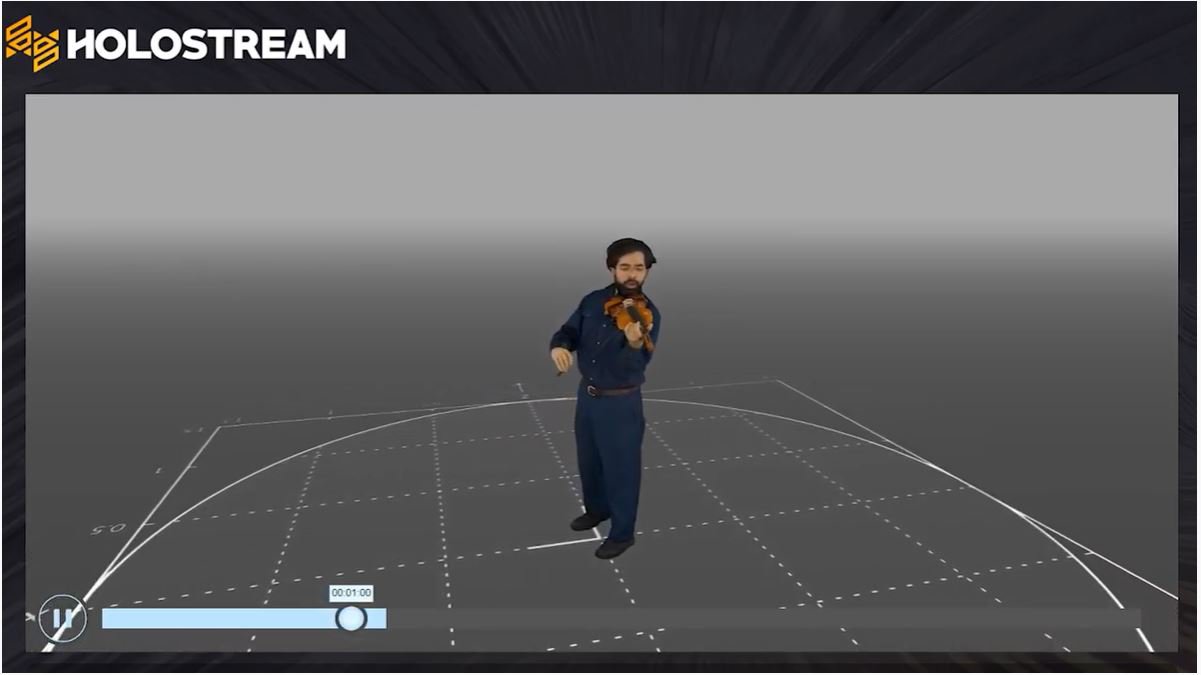

And best of all, it can be accomplished with existing technology. Using dedicated streaming software like Arcturus’ HoloStream, full volumetric videos without a cap on length can be adapted to work even on unstable connections, while 5G is making it easier than ever to consume large files. And while most volumetric videos are created on a specially configured capture stage, consumer LiDAR cameras are changing that.

In short, volumetric video is a new way for people to create and consume videos.

Why Choose Volumetric Content?

At its core, volumetric video offers viewers a more immersive experience. Instead of going to a theater for a concert or a play, volumetric video brings the performance to you by turning the actors or musicians into photoreal holograms you can sit next to. Or, instead of speaking to a bunch of talking heads on a video conference screen, you could stand in front of a full-body digital avatar of the person you're chatting with and walk around them, while they view a photoreal digital double of you. You could learn new dance moves or practice a golf swing, while a photorealistic instructor gives you feedback.

The possibilities are endless, and there is serious money involved. By 2026, the volumetric video market is expected to approach the $5 billion mark, and those are likely conservative estimates. A few of the higher profile uses so far have been:

- Music: One common use for volumetric video in music is for promotional videos. Artists ranging from Radiohead to Eminem have used the tech for a variety of purposes to represent themselves in volumetric form. Imogen Heap created an immersive music performance for VR headsets on TheWaveVR's platform. As part of a promotion for Samsung phones, members of South Korea's popular band BTS were recreated in photorealistic virtual form, allowing fans to pose for a selfie with their volumetric avatars. More recently, BTS also teamed up with Coldplay for a music video (and subsequent TV performances) of the latter's song "My Universe," where volumetric avatars of both bands join in each other's performances.

- Virtual Production and Sports: Entire landscapes have been captured using volumetric methods, then added to a game engine. The digitized backgrounds can then be displayed on LED screens set against a stage, giving performers the ability to immerse themselves in the virtual worlds. Sporting events have also benefited from volumetric video. You see it each time a live NFL game shows a replay where the camera angle rotates around the action. Soon, viewers will have the opportunity to choose the angle they want, all in real time.

- Fashion: Japanese fashion brand ANAYI is one of several clothing brands using volumetric capture to highlight collections. The clothes are worn and demonstrated by models in interactive volumetric videos accessible on browsers or via AR on mobile devices, allowing potential purchasers to truly see how the garments drape and move with the wearer, far more than a static, 2D photo ever could.

- Documentaries: Volumetric video is also being deployed for more serious purposes, such as documentary filmmakers bringing more attention to charitable causes. An attention-grabbing AR experience created using the Arcturus HoloSuite allowed multi-national bank Santander to generate awareness around the multiple aspects of homelessness. Another five-minute volumetric video of a nurse living out of her car highlighted the fact that some people who have jobs still cannot afford housing. By using volumetric video, each campaign carried an added emotional impact by putting viewers into the respective worlds and next to the subjects.

Content for these types of experiences is being created by production companies, artists and developers all over the world in volumetric capture studios, and in more and more cases, using consumer-grade LiDAR cameras. According to recent research carried out by Arcturus, the number of volumetric capture studios grew 45% from January 2021 to December 2021, while LiDAR is becoming more readily available. Volumetric capture is a worldwide phenomenon and it's still growing.

How to Create Volumetric Videos

Volumetric video can be created using one of several volumetric capture techniques, including the combination of existing technologies. A few of the most common ways to engage in volumetric capture are:

- Photogrammetry: Multiple photos of a subject are taken from various angles and stitched together by computational algorithms to generate 3D models.

- LiDAR: LiDAR (an acronym for laser imaging, detection and ranging) is a method of laser scanning used for measuring and recording landscapes or buildings in order to create 3D scans and depth maps. Relatively new to consumer devices, LiDAR produces 3D point clouds: sets of measured points on the surfaces of a scene or object, each with x, y and z coordinates. Where a 2D image is built from pixels, a 3D volume can be divided into voxels, which don't just possess x and y coordinates, but also z (depth information).

- Motion and Performance Capture: Markers are placed on the subject, and sensors (including precision cameras) record the movement of those markers in 3D. That data is then remapped onto CG models.

- 360° Video Cameras: Multiple, synced arrays of cameras capture 360° video, for use with 3D glasses and VR headsets.

- Light Field Cameras: Cameras that measure the intensity of light in a scene, along with the precise direction that the light is traveling. Using micro-lenses, users can capture an image, then adjust lighting, perspective and focal points in post-production.
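The LiDAR entry above mentions both point clouds and voxels, and the relationship between the two is easier to see in code. The following is a minimal Python sketch, not taken from any particular capture tool: the `voxelize` helper, the sample points and the 0.5-unit voxel size are all made up for illustration. It sorts loose 3D points into the cubic cells of a voxel grid.

```python
import math
from collections import defaultdict


def voxelize(points, voxel_size):
    """Group (x, y, z) points into cubic cells of side `voxel_size`.

    Returns a dict mapping integer voxel indices (i, j, k) to the list of
    points that fall inside that cell. Hypothetical helper for illustration.
    """
    if voxel_size <= 0:
        raise ValueError("voxel_size must be positive")
    grid = defaultdict(list)
    for x, y, z in points:
        # floor() keeps negative coordinates in the correct cell.
        key = (
            math.floor(x / voxel_size),
            math.floor(y / voxel_size),
            math.floor(z / voxel_size),
        )
        grid[key].append((x, y, z))
    return dict(grid)


# Four made-up sample points; the first two land in the same voxel.
cloud = [(0.1, 0.2, 0.3), (0.4, 0.1, 0.2), (1.2, 0.0, 0.9), (-0.3, 0.6, 2.1)]
voxels = voxelize(cloud, voxel_size=0.5)
```

A smaller voxel size preserves more detail but produces more cells to store and process, which is one reason volumetric data grows so quickly.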

Now, point-accurate, photorealistic holograms — built up using depth, motion and light measurements — can exist on almost any monitor, VR headset or mobile device in a live space or rendered environments (or through dedicated devices like the Looking Glass). Users can have an immersive experience through volumetric scenes, or encounter a volumetric hologram in real time. They can change the lighting or focus, or move around in any direction. Once the hologram has been created, exploration is limitless.

The Rise of Volumetric Entertainment

Volumetric video is helping to redefine several forms of entertainment, and promises to speed up production schedules. One of the biggest proponents for using volumetric video in film is Diego Prilusky, the former head of Intel Studios. Prilusky oversaw the creation of a massive 10,000-square-foot custom-built domed studio, created to explore the possibilities of volumetric capture. He even gave a TED talk discussing the potential of the technology by describing a recent use of the studio.

Intel Studios recruited movie set designers to create a desert town, complete with sand, trees and appropriate scenery. For the performers on stage, it was the same as a traditional set, but instead of using a handful of large cameras, hundreds of specialized cameras recorded every point within the dome.

Once filming was complete and the enormous amount of data it generated had been compressed, a volumetric video of the sequence was produced. Viewers could travel through the scene and completely explore the action from any direction and any viewpoint, even that of a horse in the scene, all in real time.

The technology was put to the test in a partnership with Paramount Pictures to explore immersive media in a Hollywood movie production. Together with legendary director Randal Kleiser (The Blue Lagoon, Flight of the Navigator), Prilusky's Intel Studios team reimagined Kleiser’s iconic 1978 movie Grease as a multi-user, location-based experience, ultimately recording a short song and dance sequence using volumetric capture. During his TED presentation and using just an iPad with an AR view, Prilusky was able to bring the Grease XR performers onto the stage in hologram form, and walk among them as they sang and danced to "You're The One That I Want."

Although the potential for immersive storytelling is clear, volumetric video is for more than just film or even entertainment. Along with film stages, sporting events and concerts, virtual sets are becoming common in live TV and weather studios as well.

Real-time game engines from pioneers like Epic Games (Unreal Engine) and Unity Technologies have also been critical to the rise of volumetric video, delivering interactivity and virtual studios to the public. Along with a democratization in game development, the spread of publicly accessible game engines, together with volumetric video, has led to new techniques like virtual production.

Getting Started With Volumetric Video

So how do you get started? Well, the answer might be in the palm of your hand. LiDAR and light field cameras used to be prohibitively expensive for the average user, but Apple recently placed LiDAR scanners in the iPhone 12 Pro and iPhone 13 Pro models, and they have been in iPad Pro models since 2020. The hardware is not nearly as robust as a full capture lab (yet), but creators can turn their Apple devices into a volumetric video camera, albeit a limited one. Record3D from Marek Simonik, Scandy Pro from developer Scandy and Volograms’ mobile content creation app Volu have all begun to take advantage of this, enabling volumetric capture from a single camera viewpoint on supported devices. The result is a simple yet eye-catching 3D video reconstruction.
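Single-viewpoint capture generally works by pairing each color frame with a per-pixel depth map, then projecting those depth values back out into 3D space. The Python sketch below illustrates that idea with a simple pinhole camera model. It is an illustration under stated assumptions, not how any of the apps above are implemented: the `depth_to_points` function, the tiny depth map and the intrinsics (`fx`, `fy`, `cx`, `cy`) are hypothetical values chosen to keep the arithmetic readable.

```python
def depth_to_points(depth, fx, fy, cx, cy):
    """Back-project a depth map into (x, y, z) points with a pinhole model.

    `depth` is a list of rows of distances (e.g. in meters). `fx`/`fy` are
    focal lengths in pixels and (`cx`, `cy`) is the principal point.
    Pixels with no valid reading (depth <= 0) are skipped.
    """
    points = []
    for v, row in enumerate(depth):          # v = pixel row
        for u, z in enumerate(row):          # u = pixel column
            if z <= 0:
                continue
            x = (u - cx) * z / fx
            y = (v - cy) * z / fy
            points.append((x, y, z))
    return points


# A made-up 2x3 depth map; 0.0 marks a pixel with no depth reading.
depth_map = [
    [2.0, 2.0, 0.0],
    [2.0, 1.0, 2.0],
]
points = depth_to_points(depth_map, fx=2.0, fy=2.0, cx=1.0, cy=0.5)
```

Because only one viewpoint is recorded, the resulting points cover just the side of the subject facing the camera, which is why single-device captures are more limited than a full studio.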

At the very high end are dedicated volumetric capture studios, such as Canon's Volumetric Studio Kawasaki. Situated in Kawasaki, near Tokyo, it uses the company's Free Viewpoint Video System to create content like rap duo chelmico’s 2020 live stream event, which saw the duo perform against shifting backgrounds. Using more than 100 dedicated 4K cameras and Canon's proprietary image processing technology, high-detail video and 3D data can be generated almost simultaneously with the volumetric capture, according to the company.

You can also book a multi-camera shoot at Microsoft's Mixed Reality Capture Studio in San Francisco, or through one of its licensed studio partners, such as Metastage in Los Angeles or Dimension in London (responsible for the Coldplay and BTS video, and Balenciaga's Afterworld experience). Microsoft has also been building on its Kinect technology to create the Azure Kinect DK system, which further enables volumetric capture workflows. The kit includes a 12-megapixel RGB camera, supplemented by a 1-megapixel depth camera for body tracking, a 360-degree seven-microphone array and an orientation sensor.

On top of that, Microsoft released a software developer kit (SDK) for the Azure hardware that enables full-resolution capture with no frame dropping, 4K color, variable FOV and manual control over image settings, including exposure and color temperature. Several developers have built tools to take advantage of the hardware as well. For example, Volcapp from EF EVE can control a small number of connected Azure Kinect cameras from a single PC. It can convert the combined output to produce a 360° overlapping point cloud of a full-body capture.
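Combining several depth cameras into one 360° point cloud comes down to moving every camera's points into a shared coordinate system. The Python sketch below shows the general idea, assuming each camera's position and heading are already known from calibration. It is not a description of how Volcapp or the Azure Kinect SDK does this, and the function names and camera layout are invented for the example.

```python
import math


def to_world(points, yaw_deg, translation):
    """Rotate points about the vertical (y) axis, then translate them.

    `yaw_deg` and `translation` describe a camera's pose in the shared
    world frame; both are assumed to come from a prior calibration step.
    """
    c = math.cos(math.radians(yaw_deg))
    s = math.sin(math.radians(yaw_deg))
    tx, ty, tz = translation
    return [
        (c * x + s * z + tx, y + ty, -s * x + c * z + tz)
        for x, y, z in points
    ]


def merge_clouds(captures):
    """Merge (points, yaw_deg, translation) captures into one point list."""
    merged = []
    for points, yaw_deg, translation in captures:
        merged.extend(to_world(points, yaw_deg, translation))
    return merged


# Two hypothetical cameras 4 m apart, facing each other. Each one sees a
# point 2 m straight ahead, which is the same physical spot in the room.
front = ([(0.0, 1.0, 2.0)], 0.0, (0.0, 0.0, 0.0))
back = ([(0.0, 1.0, 2.0)], 180.0, (0.0, 0.0, 4.0))
merged = merge_clouds([front, back])
```

After the transform, both cameras' readings land on (approximately) the same world position, which is what allows overlapping views to be fused into a single full-body capture.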

Scatter has also used its Depthkit software to create Depthkit Cinema, a portable 8K volumetric capture rig paired with consumer depth sensors like the Kinect and Intel’s RealSense cameras. The company also offers Depthkit Studio, a multi-sensor, full-body, in-house capture facility that can record human performances, "for teleconferencing, character assets for gaming or digital beings for Hollywood."

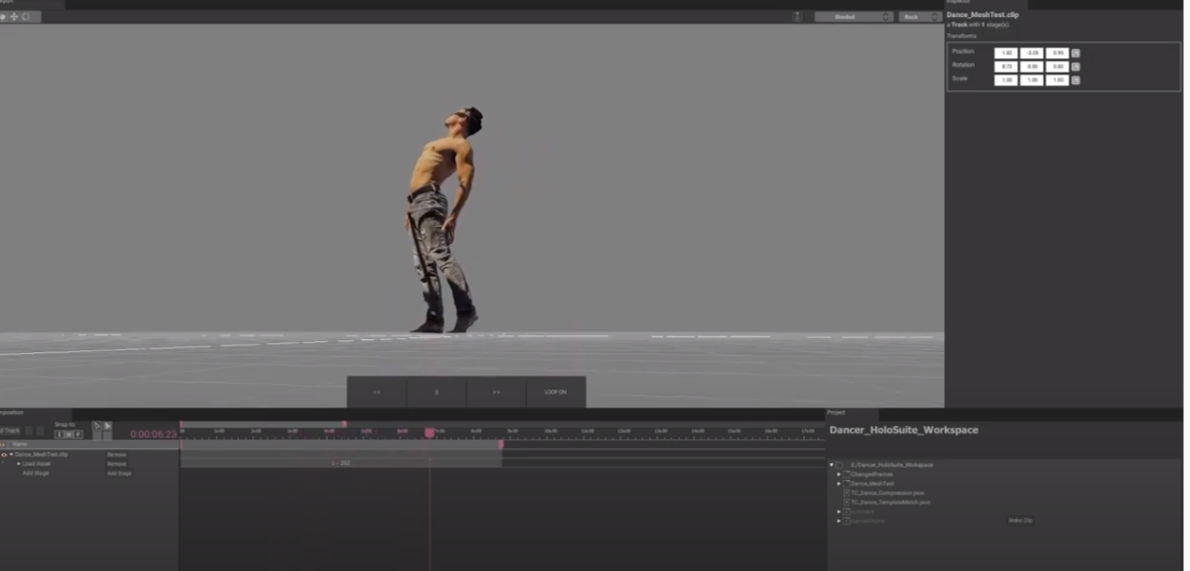

Once the data has been captured, users can then turn to software tools like Arcturus’ HoloEdit, the first non-linear editor and post-production tool for volumetric video. The capture-agnostic software has several plug-ins available that allow data to be uploaded directly into HoloEdit. From there, users can edit and refine multiple types of volumetric video content. Geometry and textures can be cleaned up, performances edited and cues added for dynamic interaction and branching narratives. The video can then be compressed and exported for direct distribution, or uploaded to game engines.

Streaming Volumetric Video

Many of the hurdles involved in the creation of volumetric video have been overcome, but the Holy Grail is to be able to stream photorealistic volumetric content to any device.

Imagine being able to watch a sporting event and have 360-degree replays of a goal or a touchdown (or a penalty if you want to get mad at the refs). You could watch a player from multiple perspectives, slow the action down and analyze any part of a replay, then return to the live game, or watch it all on a mobile device with the game on your TV. It’s all possible right now using existing technology, but the problem is the sheer amount of data involved.

Raw volumetric video files can be massive. For example, at the Rugby World Cup 2019 in Japan, Canon was tasked with providing the International Games Broadcast Services (IGBS) with highlight videos of six matches. To handle the volumetric capture of the action, the team relied on a dedicated truck parked outside the stadium containing high-performance servers, providing IGBS with highlight videos from each match within an hour of the final whistle. The processing power required to live stream an event like that in full to consumers is massive and requires dedicated hardware. And then there’s the matter of getting the data to users, a problem that emerging software like Arcturus’ HoloEdit and HoloStream is designed to address.

HoloEdit contains a proprietary compression tool to make the file more manageable, and users can also make use of HoloCompute, a cloud computing service for fast, parallel processing for editing, stabilization and compression.

With the file itself complete, volumetric video creators can then use tools like Arcturus’ HoloStream, which offers an adaptive bitrate streaming solution. In HoloStream, volumetric video files are encoded into a proprietary format, then multiple versions of the file are stored on a server, ranging in quality from low to high. Similar to how Netflix and other global giants operate, a CDN controls delivery of the best possible quality 3D stream based on the user's available bandwidth and device capabilities.
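The core decision in adaptive bitrate streaming is simple to sketch: given several encoded versions of the same video, pick the best one that fits the viewer's connection. The Python example below is a generic illustration of that selection step, not HoloStream's actual logic or format. The `Rendition` class, the bitrate ladder and the 80% headroom factor are assumptions made for the example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rendition:
    """One encoded version of a video (hypothetical structure)."""
    name: str
    bitrate_mbps: float


def pick_rendition(renditions, measured_mbps, headroom=0.8):
    """Return the highest-bitrate rendition that fits the connection.

    Only `headroom` (here 80%) of the measured bandwidth is budgeted, to
    leave a margin for fluctuations. If nothing fits, fall back to the
    lowest-bitrate rendition so playback can still start.
    """
    if not renditions:
        raise ValueError("at least one rendition is required")
    ordered = sorted(renditions, key=lambda r: r.bitrate_mbps)
    budget = measured_mbps * headroom
    choice = ordered[0]
    for rendition in ordered:
        if rendition.bitrate_mbps <= budget:
            choice = rendition
    return choice


# A made-up quality ladder; real bitrates depend on the content and codec.
LADDER = [
    Rendition("low", 5.0),
    Rendition("medium", 15.0),
    Rendition("high", 40.0),
]
```

In practice a player repeats this choice throughout playback as its bandwidth estimate changes, stepping down when the connection degrades and back up when it recovers.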

Arcturus also recently entered into a partnership with Japan's top wireless carrier, NTT DOCOMO, to stream volumetric videos to any mobile device using 5G networks. The content will still work on 4G without issue, but the new faster speeds of 5G are ideal for the larger files.

Given the potential for more immersive experiences, and the ever-increasing competition in nearly every field that can benefit from multimedia, the growth of volumetric video is all but assured. All that’s needed now are the creators who will help usher in the next generation of video. And they are already here.

Ready to start creating volumetric video? Get in touch and learn how Arcturus can help you.